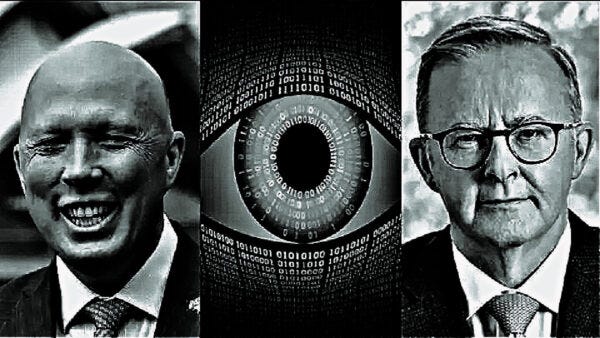

Will New Federal Laws Protect Australians From Disinformation or Serve to Silence Political Dissent?

By Paul Gregoire: Sydney Criminal Lawyers Blog

The freedom of expression the internet has brought to the global community has always posed an issue for governments, as the information they disseminate can now more easily and openly be questioned and critiqued.

This is especially so in this country, now that the mainstream media has become so compliant that ministerial press releases often dictate whole stories, as they’re taken as gospel.

Of course, governments don’t lie, they produce propaganda. And the policies and laws imposed to combat what’s commonly termed fake news have reined in digital platforms, mainstream and non-mainstream media players, and individual social media users.

Indeed, since February 2021, the Australian Code of Practice on Disinformation and Misinformation (the DIGI Code), a voluntary set of standards developed at the request of our government, has been in place, and major digital platforms, such as Facebook and Twitter, have been complying with it.

So, this raises the question of whom the government is targeting with the new set of laws it proposes to hand the Australian Communications and Media Authority (ACMA), the statutory body that monitors communications and media, especially as they won’t apply to professional news.

Clamping down on the free flow of information

Released on 24 June, the exposure draft of the Communications Legislation Amendment (Combatting Misinformation and Disinformation) Bill 2023 contains the new ACMA laws aimed at curbing the threat mis- and disinformation pose to Australians, and our “democracy, society and economy”.

“The draft framework focuses on systemic issues which pose a risk of harm on digital platforms,” communications minister Michelle Rowland outlined in a 25 June press release. “It does not empower the ACMA to determine what is true or false or to remove individual content or posts.”

“The code and standard-making powers will not apply to professional news content or authorised electoral content,” the minister continued, triggering questions as to just who does determine the truth and who the targeted entities are, since the mainstream media and government are absolved.

Well, whilst the ultimate determiner of truth isn’t being legislated, the entities these new laws will apply to are digital platform services, which include content aggregation services, connective media services, media sharing services or, somewhat liberally, “a digital service specified by the minister”.

So, this includes websites that collate information and present it to end users, including self-produced information, as well as search engines, instant messaging services, social media platforms and podcasting services, but it does not apply to internet service providers, text messaging or MMS.

And neither will these powers go as far as to monitor mis- or disinformation that’s being sent via private messages on these services, even though the 2021 ACMA report into digital services, which served to inform the bill’s content, did recommend that such messages be monitored or censored.

The government’s summary of the bill underscores that platforms will not be required “to break encryption or to monitor private messages”.

But not to worry, as current opposition leader Peter Dutton oversaw laws permitting that with the passing of his 2018 Assistance and Access Bill.

Protecting the public against its own thoughts

In his release regarding the bill, shadow communications minister David Coleman warned that “this is a complex area of policy and government overreach must be avoided”. But then he let it slip that it was the Coalition’s idea to introduce such legislation, which it had announced in March last year.

So, what sort of powers will this new bill, which is open for submissions until 6 August, set in place that make Coleman concerned about overreach in terms of “freedom of speech and expression”, even though it’s set to glide through both houses of parliament with gleeful bipartisan approval?

“Reserve powers to act” is what Rowland’s legislation will provide ACMA with, when digital platform services aren’t correctly monitoring or dealing with mis- or disinformation.

The bill defines misinformation as shared or created “online content that is false, misleading or deceptive”, which isn’t intentionally deceptive but could “contribute to serious harm”, while disinformation is misinformation shared or created with the intent to fool people.

The bill sets out that harm can be discriminatory hatred against a group, disruption of public order, harm to the integrity of democratic processes at all levels of government, harm to the health of people or the environment and, of course, financial harm to individuals, the economy as a whole or a sector of it.

Reserve powers to interfere

In terms of what ACMA will be empowered to do if it considers a digital platform service needs to lift its game, firstly, the statutory body will be able to gather information from a transgressor or require that provider to keep certain records regarding mis- or disinformation and report in if necessary.

ACMA will be able to direct bodies or associations representing sections of the digital platform services industry to develop a code of conduct regarding this type of information, and from there, the government authority can register the set of rules and enforce them.

But if ACMA finds that a digital service provider or parts of the industry aren’t complying with a code or that it’s ineffective, then it can develop and enforce its own industry standard, which will comprise stronger regulations.

“These actions would generally be applied in a graduated manner, dependent on the harm caused, or risk of harm and could include issuing formal warnings, infringement notices, remedial directions, injunctions and civil penalties,” the summary states, adding that from there it’s a criminal matter.

The bill proposes two criminal offences. The first is noncompliance with a registered code, which carries a maximum fine of $2.75 million or 2 percent of the offending corporation’s global turnover, while individuals would face up to a $550,000 penalty.

But noncompliance with an industry standard is a much more serious offence, which would carry a $6.88 million fine for a corporation or 5 percent of its global turnover, while individuals would be liable to a fine of up to $1.38 million.
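To get a feel for the scale of these penalties, the figures above can be turned into a rough calculation. The sketch below is illustrative only: it assumes the corporate maximum is whichever is greater of the fixed sum and the percentage of global turnover, which the wording above doesn’t spell out, and the names `CODE_BREACH`, `STANDARD_BREACH` and `max_corporate_penalty` are made up for the example.

```python
# Maximum penalties as quoted in the article, in Australian dollars.
# Percentages are kept as whole numbers so the arithmetic stays in integers.
CODE_BREACH = {"fixed": 2_750_000, "turnover_pct": 2, "individual": 550_000}
STANDARD_BREACH = {"fixed": 6_880_000, "turnover_pct": 5, "individual": 1_380_000}


def max_corporate_penalty(tier: dict, global_turnover: int) -> int:
    """Return the maximum corporate fine for a penalty tier.

    ASSUMPTION: the cap is the greater of the fixed amount and the
    turnover-based amount. The article only says "or".
    """
    turnover_based = global_turnover * tier["turnover_pct"] // 100
    return max(tier["fixed"], turnover_based)


if __name__ == "__main__":
    # Hypothetical platform with $1 billion in global turnover.
    turnover = 1_000_000_000
    print("Registered code breach:", max_corporate_penalty(CODE_BREACH, turnover))
    print("Industry standard breach:", max_corporate_penalty(STANDARD_BREACH, turnover))
```

On that assumption, the fixed sums only matter for smaller operators. Once a platform’s global turnover passes $137.5 million, the percentage-based figure becomes the larger of the two in both tiers.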

“A boot stamping on a human face – forever”

So, with eight major digital platform services in Australia – Adobe, Apple, Meta, Google, Microsoft, Redbubble, TikTok and Twitter – already complying with the DIGI Code, and the crackdowns on fake news and divisive messaging that have already occurred, why are such steep and far-reaching measures needed now?

The laws stipulate that professional news content, or that authorised by federal, state or local governments, as well as any information produced by or for an accredited education provider, will all be exempt.

So, what sort of ongoing onslaught of mis- or disinformation does the Albanese government consider necessitates such severe interventions?

As an example of a health threat, the bill’s summary provides “misinformation that caused people to ingest or inject bleach products to treat a viral infection”, which is an obvious nod to a ridiculous suggestion made by Donald Trump, who was the president of the United States when he made it.

Other examples provided involve misinformation inciting hatred towards a group, “undermining the impartiality of an Australian electoral management body ahead of an election or a referendum”, or incorrect information about water saving measures during a prolonged drought.

Yet, could it simply be that the government of what the New York Times described as “the world’s most secretive democracy” is seeking to empower itself with a means to lean on digital platforms that are running defensible dissenting political information that only harms the validity of its own lies?