Tech giant Meta to 'Safeguard' Australian Referendum Integrity, Arbitrate Truth

By Rebekah Barnett: Dystopian Down Under

Meta platforms are a primary source of news for most Australians.

Meta, Facebook’s parent company, is the latest international mega-company to get involved in Australia’s upcoming referendum regarding an Indigenous Voice to Parliament, after Pfizer pledged its support for the Yes campaign earlier this year.

Meta will provide an undisclosed amount of funding to ‘fact-checkers,’ provide specialised ‘social media safety training’ to MPs and advocacy groups, and block fake accounts in its bid to “combat misinformation, voter interference and other forms of abuse on our platforms.”

Meta’s platforms include Facebook, Instagram, Messenger, WhatsApp and its new Twitter competitor, Threads.

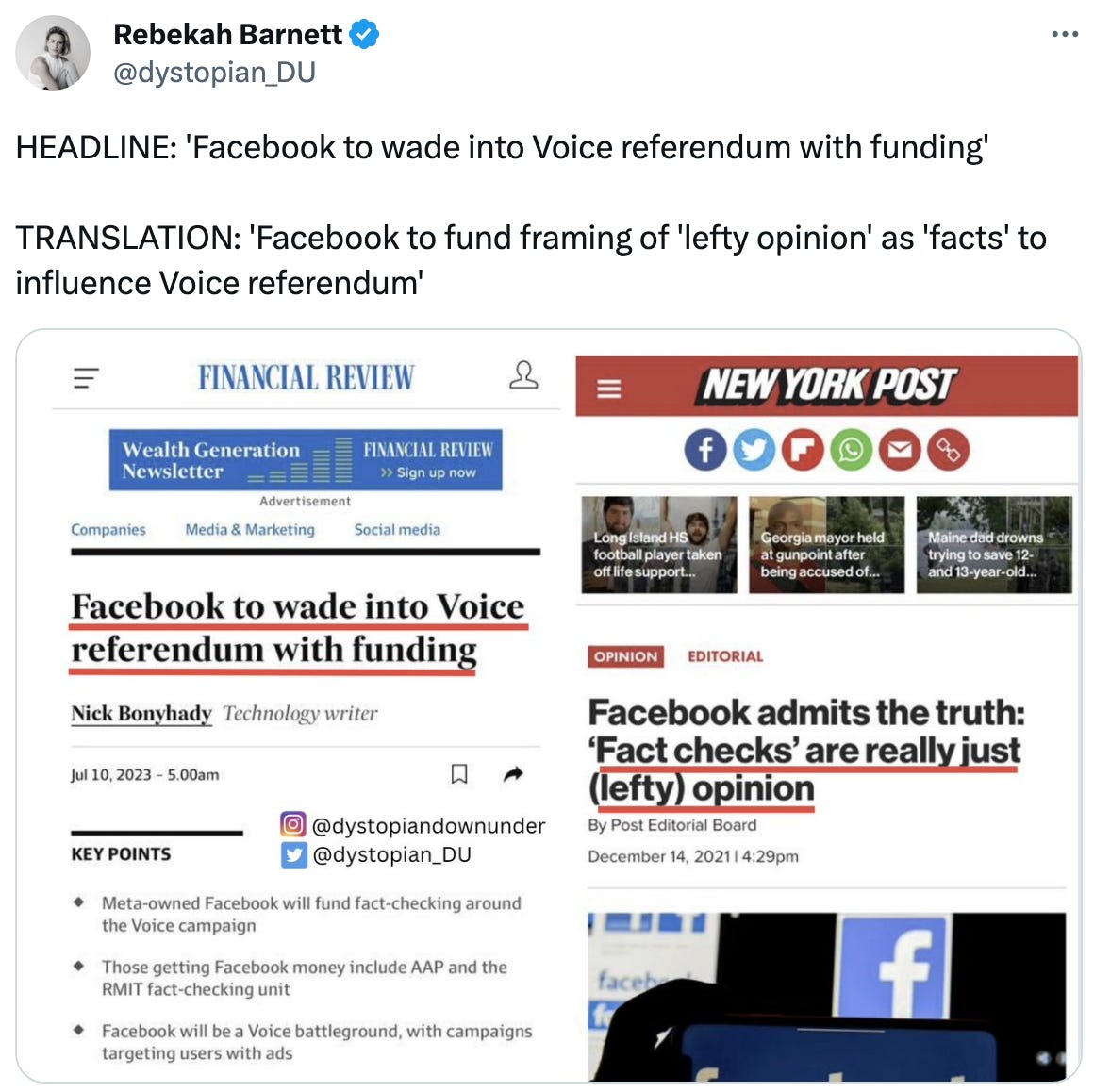

‘Fact-check’ or lefty opinion?

Of particular concern is the funding of fact-checkers to arbitrate what are ‘true facts’ versus ‘misinformation’ on a matter of such import (the referendum proposes altering the Australian Constitution). As reported by the New York Post, Facebook admits that its fact-checks are ‘opinions’.

Due to the political leanings of the employees of social media companies and affiliated organisations, these opinions overwhelmingly lean left. This was confirmed in the Twitter Files, in which journalists found that while both sides of the political aisle were subject to censorship on Twitter, conservative views were more likely to be censored.

Fact-checkers named as recipients of Meta money include the Australian Associated Press (AAP) and the Royal Melbourne Institute of Technology (RMIT) ‘fact-checking’ unit, both of which have been responsible for publishing false claims as ‘facts.’

For example, AAP falsely claimed that the Australian Government had not tried to hide reports of Covid vaccine adverse reactions. Documents released under Freedom of Information (FOI) requests have revealed that the Therapeutic Goods Administration (TGA) did in fact hide child deaths reported following vaccination, due to concerns that disclosure “could undermine public confidence.”

In another document release, the Department of Health was shown to have actively sought the removal of Facebook posts describing users’ adverse reactions to Covid vaccines.

RMIT’s ‘fact-checking’ unit falsely ‘debunked’ claims that Covid vaccines were affecting women’s menstruation. RMIT also falsely claimed that “evidence overwhelmingly shows that masks and lockdowns do work,” failing to address the many peer-reviewed studies and reports that found masks and lockdowns to be ineffective.

Over and over again, AAP, RMIT and other ‘fact-checkers’ have misrepresented contestable topics as ‘settled science.’ They have conflated the absence of evidence (due to undone science) with categorical evidence of absence. They have ‘debunked’ emerging science based not on alternative findings, but on the mere opinion of their favoured experts. They have consistently parroted government and Big Pharma media statements, even when it was dangerous and insupportable to do so.

Blocking ‘bots’ to crush fake news has previously resulted in the proliferation of fake news

Mia Garlick, Meta’s director of public policy for Australia, says, “We’ve also improved our AI so that we can more effectively detect and block fake accounts… Meta has been preparing for this year’s voice to parliament referendum for a long time, leaning into expertise from previous elections.”

This is not comforting, given the successful weaponisation of such systems to influence public opinion and elections.

High-profile bot conspiracy theories facilitated and perpetuated by social media platforms include the Hamilton 68 dashboard, which falsely accused legitimate right-leaning Twitter accounts of being Russian bots, leading to an avalanche of fake news items about Russian influence on US elections and attitudes.

There is also the disinformation operation ‘Project Birmingham’, which created fake Russian Twitter accounts to follow Republican candidate Roy Moore in the 2017 Alabama Senate election, resulting in news stories that Russia was backing Moore in the race. In both of these cases, Twitter’s bot-detection and blocking capacities were weaponised to silence legitimate voices, and to influence public opinion and behaviour.

On the weaponisation of AI for political gain, Facebook’s algorithm was instrumental in burying and discrediting the true story of incriminating content found on Hunter Biden’s laptop, a move that many believe influenced the outcome of the 2020 US election. This particular case exemplifies how conspiracy theories seeded by government agencies and think tanks can be amplified by social media platforms.

Mark Zuckerberg, CEO of Meta, said that the FBI initially approached Facebook with a warning about ‘Russian propaganda’ before the laptop story broke, leading Facebook employees to believe that the story was fake and therefore to use AI tools to suppress it. The above-mentioned Russian bot hoaxes provided context that likely reinforced this belief. Whistleblowers have since testified that the FBI was aware of the truth of the story before it seeded fake ‘Russian propaganda’ advice to Facebook.

Meta will foreground the voices of young people, the group most likely to vote Yes in the referendum

Meta will foreground youth voices by providing charities, including UNICEF, with advertising credits to “raise awareness of voice-related media literacy” and help “raise the voices of a range of young people in support of the voice to parliament including Aboriginal youth”.

Current polling by The Australia Institute suggests that young people are far more likely to vote Yes in the referendum, with 73 per cent of 18–29-year-olds backing Yes, compared to only 38 per cent of those aged 60 and over.

Experts anticipate that the youth vote will be decisive in the referendum, after this age group helped to swing the 2022 federal election.

Funding efforts to amplify young people’s voices on the Voice may inadvertently privilege the Yes case on Meta’s sites, with the potential to influence the referendum outcome.

Meta platforms primary source of news for most Australians

Meta’s social platforms unavoidably form a collective online public square in which much of the debate over the upcoming Voice to Parliament referendum will play out.

Researchers from the University of Canberra found that, during the pandemic, Facebook was the most used social network site, with 73 per cent of Australians reporting that they had used the platform in the past week. Forty-two per cent of Australians regularly used Facebook Messenger, and 39 per cent regularly used Instagram. Fifty-two per cent of Australians turn to social media for news, and more than 90 per cent use at least one type of social media or online platform regularly.

The fine line between appropriate moderation and overreach

Meta has the unenviable task of walking the line between necessary moderation (removing child abuse content) and overreach (de-platforming vaccine injury support groups). This is a complex balance and a difficult one to strike. A scathing exposé by the Wall Street Journal outlines just some of the difficulties.

However, the profit motive, fear of litigation, government pressure, and sheer size (in 2021, Facebook reported having 40,000 people working on safety and security alone) all work strongly against Meta making the necessary changes.

Censorship and opinion-steering are the easy way out. Big Tech companies can please their major stakeholders, stay on the right side of regulators, profit off users’ data, and expediently silence the rabble who think their speech matters.

Indeed, we have lately seen a trend towards Big Tech companies partnering with governments in ways that prevent robust debate of contestable ideas online, privilege certain voices over others, and infringe on the free expression of ordinary Australians.

Vows from big social media platforms to ‘safeguard’ referendum and election integrity, to privilege some voices over others, and to adjudicate what is and is not misinformation and disinformation should be met with deep scepticism. Meta should concentrate on improving its response to human rights abuses facilitated by its platforms.

‘Fact-checkers’ should be exposed for the dishonest grift that they are, and done away with altogether.

Rebekah Barnett reports from Western Australia. She holds a BA in Communications from the University of Western Australia and volunteers for Jab Injuries Australia. When it comes to a new generation of journalists born out of this difficult time in our history, she is one of the brightest stars in the firmament. You can follow her work and support Australian independent journalism with a paid subscription or one-off donation via her Substack page, Dystopian Down Under.